Error when running python demo_gradio.py

Source: 11-3 1-3 Hands-on model quantization, model inference, and serving on a cloud server

白聪聪

2023-11-22 14:58:58

Problem description:

Hello, I followed the steps in the video, and an error occurred when I ran python demo_gradio.py.

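Steps:

1. Go to the llm_course directory and activate the virtual environment:

conda activate /output/llm/llm_course/open-mmlab

2. Install the gradio version shown in the video:

pip install gradio==3.50.2

3. In the llm_course directory, create a symbolic link:

ln -s /input0 /output/llm/llm_course/FlagAlpha-Llama2-Chinese-7b-Chat

4. Go to the demo directory and run:

python demo_gradio.py

5. The error described in this question appears.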

Screenshot:

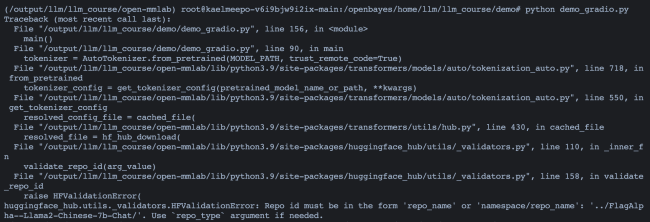

Error output:

(/output/llm/llm_course/open-mmlab) root@kaelmeepo-v6i9bjw9i2ix-main:/openbayes/home/llm/llm_course/demo# python demo_gradio.py
Traceback (most recent call last):
  File "/output/llm/llm_course/demo/demo_gradio.py", line 156, in <module>
    main()
  File "/output/llm/llm_course/demo/demo_gradio.py", line 90, in main
    tokenizer = AutoTokenizer.from_pretrained(MODEL_PATH, trust_remote_code=True)
  File "/output/llm/llm_course/open-mmlab/lib/python3.9/site-packages/transformers/models/auto/tokenization_auto.py", line 718, in from_pretrained
    tokenizer_config = get_tokenizer_config(pretrained_model_name_or_path, **kwargs)
  File "/output/llm/llm_course/open-mmlab/lib/python3.9/site-packages/transformers/models/auto/tokenization_auto.py", line 550, in get_tokenizer_config
    resolved_config_file = cached_file(
  File "/output/llm/llm_course/open-mmlab/lib/python3.9/site-packages/transformers/utils/hub.py", line 430, in cached_file
    resolved_file = hf_hub_download(
  File "/output/llm/llm_course/open-mmlab/lib/python3.9/site-packages/huggingface_hub/utils/_validators.py", line 110, in _inner_fn
    validate_repo_id(arg_value)
  File "/output/llm/llm_course/open-mmlab/lib/python3.9/site-packages/huggingface_hub/utils/_validators.py", line 158, in validate_repo_id
    raise HFValidationError(
huggingface_hub.utils._validators.HFValidationError: Repo id must be in the form 'repo_name' or 'namespace/repo_name': '../FlagAlpha--Llama2-Chinese-7b-Chat/'. Use `repo_type` argument if needed.

Could the instructor please take a look? Thank you!
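A note on reading this traceback: the call ended up in hf_hub_download, which suggests that MODEL_PATH was not found as a local directory, so the string was treated as a Hugging Face Hub repo id and failed validation. Two things in the post may contribute. The link created in step 3 is named FlagAlpha-Llama2-Chinese-7b-Chat (one hyphen), while the path in the error is FlagAlpha--Llama2-Chinese-7b-Chat (two hyphens); and a relative path is resolved against the shell's current directory, which the prompt shows as /openbayes/home/llm/llm_course/demo. The following is only a minimal pre-flight sketch for checking this; `describe_model_path` is a hypothetical helper name and is not part of demo_gradio.py.

```python
import os


def describe_model_path(model_path: str) -> str:
    """Return a short status for a local model path (hypothetical helper)."""
    # Relative paths are resolved against the current working directory,
    # not against the folder that contains demo_gradio.py.
    resolved = os.path.abspath(model_path)
    if os.path.isdir(resolved):
        # Also true for a symlink that leads to a directory.
        return "directory"
    if os.path.islink(resolved):
        # The link itself exists but does not lead to a directory.
        return "symlink without a directory target"
    if os.path.exists(resolved):
        return "not a directory"
    return "missing"


if __name__ == "__main__":
    # Path copied from the error message in the post.
    path = "../FlagAlpha--Llama2-Chinese-7b-Chat/"
    print(os.path.abspath(path), "->", describe_model_path(path))
```

Run from the demo directory, any result other than "directory" would be consistent with the error above.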

2 answers

那位科技大模型算法

2024-03-18

The issue has already been resolved via private message.

白聪聪

Asker

2023-11-22

The instructor replied privately with the fix: change MODEL_PATH in demo_gradio.py to the dataset path:

# MODEL_PATH = "../FlagAlpha--Llama2-Chinese-7b-Chat/"
MODEL_PATH = "/openbayes/input/input0"
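If the mount point differs between machines, one option is to make the path overridable and to fail early with a clear message instead of the repo-id error. This is a hedged sketch, not the course's code: the environment variable name `LLM_MODEL_PATH` and the helpers `resolve_model_path` and `load_tokenizer` are assumptions introduced for illustration; only the default path and the `AutoTokenizer.from_pretrained(..., trust_remote_code=True)` call come from the thread.

```python
import os

DEFAULT_MODEL_PATH = "/openbayes/input/input0"  # path given in the answer


def resolve_model_path(env_var: str = "LLM_MODEL_PATH") -> str:
    """Pick the model directory and fail early if it is not there."""
    # env_var is a hypothetical override; without it the default is used.
    candidate = os.path.abspath(os.environ.get(env_var, DEFAULT_MODEL_PATH))
    if not os.path.isdir(candidate):
        raise FileNotFoundError(f"Model directory not found: {candidate}")
    return candidate


def load_tokenizer(model_path: str):
    # Imported lazily so the path check works without transformers installed.
    from transformers import AutoTokenizer

    return AutoTokenizer.from_pretrained(model_path, trust_remote_code=True)
```

In demo_gradio.py this could be used as `MODEL_PATH = resolve_model_path()` followed by `tokenizer = load_tokenizer(MODEL_PATH)`.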
